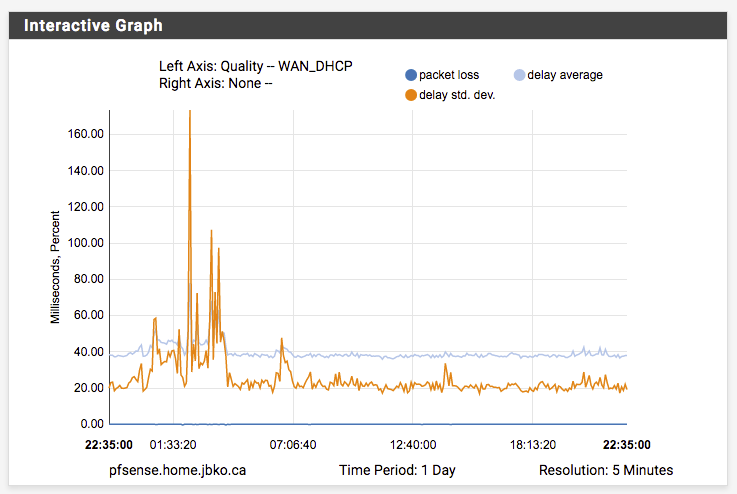

A friend and colleague of mine (Matt) and I have an ongoing discussion about over-specced gear for our home networks. Our core routers have been FW-7540s running pfSense (Atom D525, 4GB RAM, 4 Intel NICs) since 2013. pfSense offers a huge advantage over commercial-grade routers: I run dual WAN with failover based on ping, link, and packet loss, have extremely customizable DNS and DHCP, and can set up an OpenVPN server in just a few minutes. Matt and I have also recently had 500Mbit+ downstream connections installed, so it’d be good to know what hardware and software combination is “for sure” capable of utilizing the full pipe.

There have been a series of excellent articles at Ars Technica this year by Jim Salter that constantly get mentioned in our discussions:

- Numbers don’t lie—it’s time to build your own router

- The Ars guide to building a Linux router from scratch

- The Router rumble: Ars DIY build faces better tests, tougher competition

The first two articles were mildly interesting. We do plenty of Linux-based routing at the office, but I don’t really want to build a router from scratch at home if there is a distribution that works as well. The results in Jim’s latest Router rumble article with pfSense 2.3.1 and the homebrew Celeron J1900 were described as “tweaky” and didn’t seem to hold up against the homebrew variant running Linux. I found this a bit odd because FreeBSD is widely assumed to have a hardened, robust and performant network stack; the general impression amongst networking folks I’ve talked to is that Linux isn’t quite as good for this use case.

Coming from 2.2, the 2.3 series of pfSense is not exactly everything I’m looking for. I had to ‘factory reset’ the unit shortly after the 2.2 to 2.3 upgrade to avoid firewall rules displaying errors in the web configuration UI. As a personal irritation, the development team also took out the RRD-style graphs and replaced them with a “Monitoring” page, which I am not a fan of.

The Router rumble article, though, tested the UniFi Security Gateway but not the 3-port EdgeRouter Lite, which is my preferred option for users that need more capability than their ISP-provided modem/router combination. Jim did mention that they were both not up to routing gigabit from WAN to LAN, so I figured I’d see if I could replicate the results and if the ERL was any better than the USG.

Configuration and Setup

Following the posts, I configured two machines to act as client and server. Both were booted to Ubuntu 16.04.1 live USB sticks and had ‘apt-get update; apt-get upgrade’ run before any tests were performed. I also had to run “rm -rf /var/lib/apt/lists” to get apt to start working.

- The “client” machine at 192.168. running the test script and the netdata graphing and collection system is a Core i7 4770K, 16GB RAM and a PCI-Express Intel 82574L gigabit network card.

- The “server” machine with nginx and the sample files is a Lenovo X230, Core i5 3320M, 16GB RAM and an onboard Intel 82579LM gigabit NIC.

Some additional changes from the Ars Technica article are more suitable for my configuration and testing. On Ubuntu 16.04, the command to install ab and nginx should be apt-get install apache2-utils nginx (the ‘ab’ package doesn’t exist.) I made the same configuration changes to /etc/nginx/nginx.conf, /etc/default/nginx and /etc/sysctl.conf as suggested in the article:

/etc/nginx/nginx.conf

```nginx
events {
    # The key to high performance - have a lot of connections available
    worker_connections 19000;
}

# Each connection needs a filehandle (or 2 if you are proxying)
worker_rlimit_nofile 20000;

http {
    # ... existing content
    keepalive_requests 0;
    # ... existing content
}
```

/etc/default/nginx

```bash
# Note: You may want to look at the following page before setting the ULIMIT.
#  http://wiki.nginx.org/CoreModule#worker_rlimit_nofile
# Set the ulimit variable if you need defaults to change.
#  Example: ULIMIT="-n 4096"
ULIMIT="-n 65535"
```

/etc/sysctl.conf

```ini
kernel.sem = 250 256000 100 1024
net.ipv4.ip_local_port_range = 1024 65000
net.core.rmem_default = 4194304
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 262144
net.ipv4.tcp_wmem = 262144 262144 262144
net.ipv4.tcp_rmem = 4194304 4194304 4194304
net.ipv4.tcp_max_syn_backlog = 4096
net.ipv4.tcp_mem = 1440715 2027622 3041430
```

The testing script was modified to use a -s 20 parameter as indicated in the latest article, as well as sleeping for 10 and 20 seconds at appropriate times to distinguish each test run in the graphs:

test.sh

#!/bin/bash mkdir -p ~/tests mkdir -p ~/tests/$1 ulimit -n 100000 ab -rt180 -c10 -s 20 http://192.168.99.101/10K.jpg 2>&1 | tee ~/tests/$1/$1-10K-ab-t180-c10-client-on-LAN.txt; sleep 10 ab -rt180 -c100 -s 20 http://192.168.99.101/10K.jpg 2>&1 | tee ~/tests/$1/$1-10K-ab-t180-c100-client-on-LAN.txt; sleep 10 ab -rt180 -c1000 -s 20 http://192.168.99.101/10K.jpg 2>&1 | tee ~/tests/$1/$1-10K-ab-t180-c1000-client-on-LAN.txt; sleep 10 ab -rt180 -c10000 -s 20 http://192.168.99.101/10K.jpg 2>&1 | tee ~/tests/$1/$1-10K-ab-t180-c10000-client-on-LAN.txt sleep 20 ab -rt180 -c10 -s 20 http://192.168.99.101/100K.jpg 2>&1 | tee ~/tests/$1/$1-100K-ab-t180-c10-client-on-LAN.txt; sleep 10 ab -rt180 -c100 -s 20 http://192.168.99.101/100K.jpg 2>&1 | tee ~/tests/$1/$1-100K-ab-t180-c100-client-on-LAN.txt; sleep 10 ab -rt180 -c1000 -s 20 http://192.168.99.101/100K.jpg 2>&1 | tee ~/tests/$1/$1-100K-ab-t180-c1000-client-on-LAN.txt; sleep 10 ab -rt180 -c10000 -s 20 http://192.168.99.101/100K.jpg 2>&1 | tee ~/tests/$1/$1-100K-ab-t180-c10000-client-on-LAN.txt sleep 20 ab -rt180 -c10 -s 20 http://192.168.99.101/1M.jpg 2>&1 | tee ~/tests/$1/$1-1M-ab-t180-c10-client-on-LAN.txt; sleep 10 ab -rt180 -c100 -s 20 http://192.168.99.101/1M.jpg 2>&1 | tee ~/tests/$1/$1-1M-ab-t180-c100-client-on-LAN.txt; sleep 10 ab -rt180 -c1000 -s 20 http://192.168.99.101/1M.jpg 2>&1 | tee ~/tests/$1/$1-1M-ab-t180-c1000-client-on-LAN.txt; sleep 10 ab -rt180 -c10000 -s 20 http://192.168.99.101/1M.jpg 2>&1 | tee ~/tests/$1/$1-1M-ab-t180-c10000-client-on-LAN.txt
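The twelve ab invocations in test.sh differ only in file size and concurrency, so the same matrix can be sketched as two nested loops. This is an illustration rather than the script I actually ran: the `SERVER`, `REQUESTS` and `DRY_RUN` variables and both function names are made up for the example. The ab manual notes that `-t` implies an internal `-n 50000`, so `REQUESTS` is there to pass a larger `-n` after `-t` if you want each run to last the full 180 seconds.

```shell
#!/bin/bash
# Sketch of test.sh as two nested loops. SERVER, REQUESTS and DRY_RUN are
# illustrative names that do not appear in the original script.

SERVER="${SERVER:-192.168.99.101}"  # nginx host, as in the original script
REQUESTS="${REQUESTS:-}"            # optional request cap, passed as -n after -t
DRY_RUN="${DRY_RUN:-1}"             # 1 prints the commands, 0 runs them

# Echo the ab command line for one file size ($1) and concurrency level ($2).
ab_command() {
    local cmd="ab -rt180 -c$2 -s 20"
    if [ -n "$REQUESTS" ]; then
        cmd="$cmd -n $REQUESTS"
    fi
    echo "$cmd http://$SERVER/$1.jpg"
}

# Walk the 3 x 4 matrix of file sizes and concurrency levels for test name $1.
run_matrix() {
    local name="$1" size conc out
    for size in 10K 100K 1M; do
        for conc in 10 100 1000 10000; do
            out="$HOME/tests/$name/$name-$size-ab-t180-c$conc-client-on-LAN.txt"
            if [ "$DRY_RUN" = "1" ]; then
                echo "$(ab_command "$size" "$conc") > $out"
            else
                mkdir -p "$HOME/tests/$name"
                # Word splitting of the command string is intentional here.
                $(ab_command "$size" "$conc") 2>&1 | tee "$out"
                sleep 10
            fi
        done
        # Extra pause so each file size stands apart in the graphs.
        if [ "$DRY_RUN" != "1" ]; then
            sleep 10
        fi
    done
}

# Only act when a test name is supplied, e.g. ./test-matrix.sh erl
if [ -n "${1:-}" ]; then
    run_matrix "$1"
fi
```

With the default `DRY_RUN=1` the script only prints what it would run, which is a cheap way to check the file names before committing to roughly 40 minutes of benchmarking. Setting `DRY_RUN=0` runs the matrix with the same 10 and 20 second pauses as the original.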

I also generated ‘JPEG’ files with /dev/urandom and placed them in /var/www/html (default nginx directory):

```bash
dd if=/dev/urandom of=/var/www/html/10K.jpg bs=1024 count=10
dd if=/dev/urandom of=/var/www/html/100K.jpg bs=1024 count=100
dd if=/dev/urandom of=/var/www/html/1M.jpg bs=1024 count=1024
```
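A quick sanity check on the payload sizes is worthwhile before benchmarking, since a wrong file size would skew every throughput figure. The sketch below repeats the dd commands through a small helper and prints each file's byte count. `WEBROOT` and `make_payload` are names invented for this example; `WEBROOT` defaults to a scratch directory so it can be tried without root.

```shell
#!/bin/bash
# Sketch: create the three random payloads and confirm their sizes.
# WEBROOT is an illustrative variable; it defaults to a scratch directory so the
# snippet can be tried without root. Point it at /var/www/html for real use.

WEBROOT="${WEBROOT:-$(mktemp -d)}"

# Write a file named $1 containing $2 KiB of random data under WEBROOT.
make_payload() {
    dd if=/dev/urandom of="$WEBROOT/$1" bs=1024 count="$2" 2>/dev/null
}

make_payload 10K.jpg 10
make_payload 100K.jpg 100
make_payload 1M.jpg 1024

# Print each file's size in bytes; expect 10240, 102400 and 1048576.
for f in 10K.jpg 100K.jpg 1M.jpg; do
    echo "$f $(wc -c < "$WEBROOT/$f" | tr -d ' ')"
done
```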

Finally, installing netdata on the client needed a different set of dependencies (16.04 may have changed some of them):

sudo apt-get install zlib1g-dev uuid-dev libmnl-dev gcc make git autoconf libopts25-dev libopts25 autogen-doc automake pkg-config curl

After cloning the Git repository and running the suggested install steps, you may also need to edit /etc/netdata/netdata.conf and add the following sections (replacing enp5s0 with your network interface from ifconfig) in order to get the same graphs:

/etc/netdata/netdata.conf

```ini
[net.enp5s0]
enabled = yes

[net_packets.enp5s0]
enabled = yes
```

Results

You can download the test runs in a ZIP file, which contains the ‘ab’ output from the tests. Note that some of the graphs show a larger separation between the ab runs with different filesizes; this was due to different ‘sleep’ values being tested in the script.
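For anyone who wants to summarize the saved ab output themselves, the sketch below pulls the transfer rate and failed-request count out of each file. It is not necessarily how the tables in this post were produced: the conversion assumes ab's "Kbytes/sec" means 1024 bytes per second and that one Mbit is 10^6 bits, and `summarize_ab` is a name made up for the example.

```shell
#!/bin/bash
# Sketch: pull the throughput and failure count out of saved ab output.
# Assumes ab's "Kbytes/sec" is 1024 bytes per second and 1 Mbit = 10^6 bits;
# the tables in this post may have been computed differently.

# Print "<Mbit/s> <failed requests>" for the ab output file given as $1.
summarize_ab() {
    LC_ALL=C awk '
        /^Failed requests:/ { failed = $3 }
        /^Transfer rate:/   { mbit = $3 * 1024 * 8 / 1000000 }
        END { printf "%.2f %d\n", mbit, failed }
    ' "$1"
}

# Summarize every file passed on the command line.
for f in "$@"; do
    echo "$f $(summarize_ab "$f")"
done
```

Run it against one test directory, for example `./summarize.sh ~/tests/erl/*.txt`, to get one line per ab run.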

Direct Connection (Auto MDI-X)

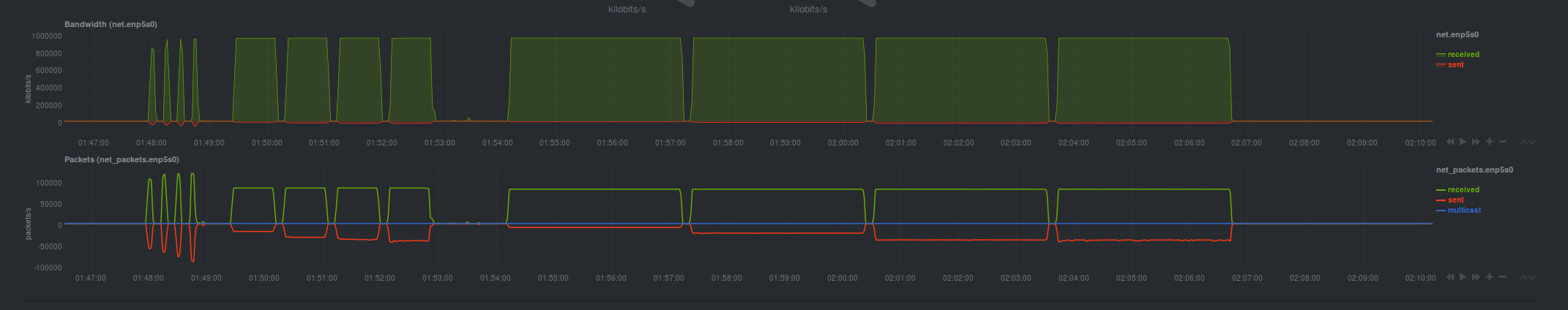

Many NICs support auto MDI-X, which allows a standard Ethernet cable to act like a crossover cable if both network cards support it. I ran a test with the server directly connected to the client and the resulting graph was very clean.

| Filesize | Average Mbit/s | Total Failed Requests | Notes |
| --- | --- | --- | --- |
| 10KB | 700.34 | 3117 | 10K concurrency test only resulted in 308Mbit. Failed requests only in 10K concurrency test. |
| 100KB | 785.03 | 3368 | 10K concurrency test only resulted in 417Mbit. Failed requests only in 10K concurrency test. |
| 1MB | 912.16 | 5533 | All tests had a similar speed. Failed requests only in 10K concurrency test. |

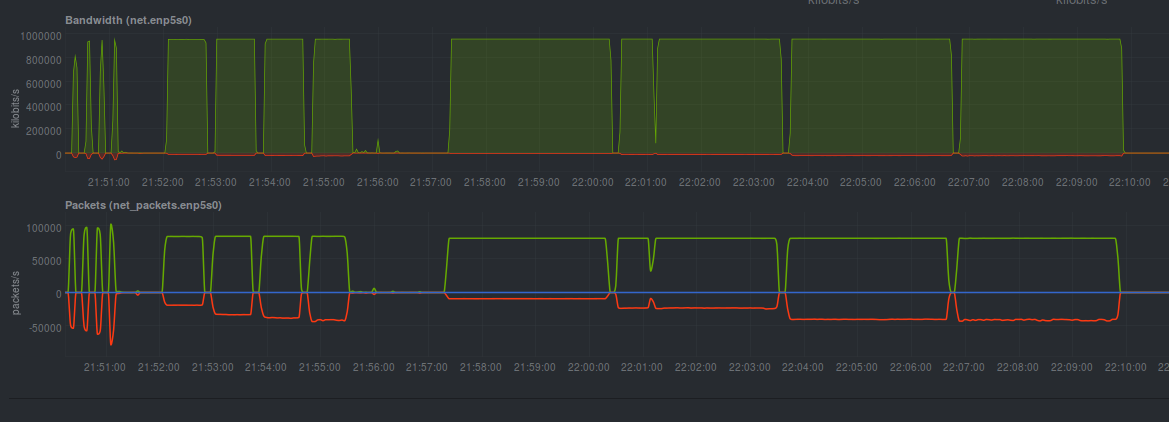

Switched Connection

With both systems connected to a Netgear GS108T switch, the graphs were fairly consistent with one unexplained valley in the 1MB/-c 100 test – but there were no failed requests to nginx noted in the ab results. This seemed to be a fluke; I wasn’t able to reproduce the problem in the exact same spot later. However, the valley did appear during other tests, lending suspicion that the GS108T may be causing a problem.

| Filesize | Average Mbit/s | Total Failed Requests | Notes |
| --- | --- | --- | --- |
| 10KB | 651.75 | 3939 | 10K concurrency test only resulted in 131Mbit. No failed requests in 10, 100 and 1000 concurrency tests. |
| 100KB | 760.61 | 1085 | 10K concurrency test only resulted in 319Mbit. No failed requests in 10, 100 and 1000 concurrency tests. |
| 1MB | 908.38 | 6690 | All tests had a similar speed. Failed requests only on 1000 and 10K concurrency tests. |

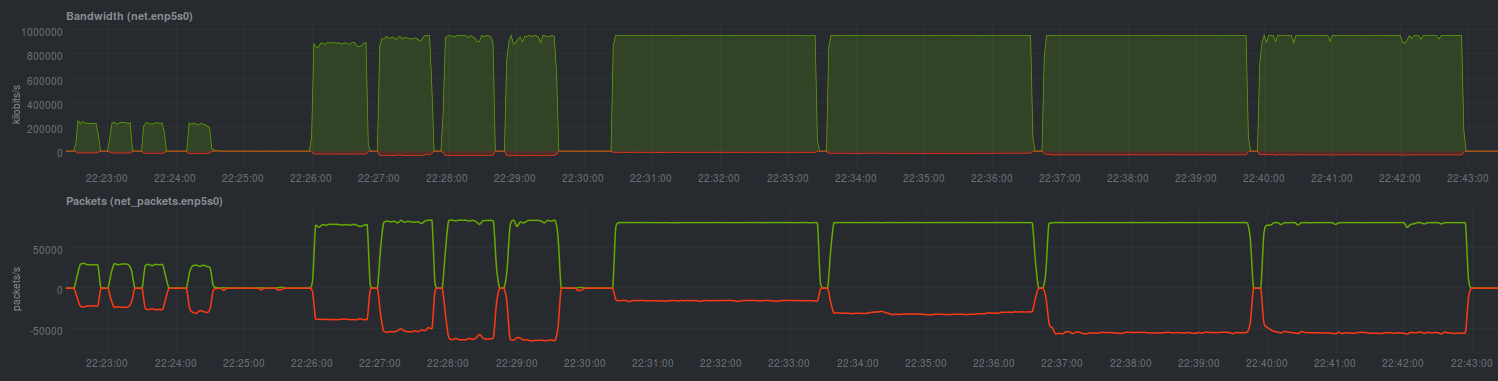

EdgeRouter Lite

The ERL was flashed with 1.9.0 firmware and configured using the “Basic Setup” wizard, which sets configuration back to default values. The eth0 port acts as the WAN interface and provides NAT to the eth1 (LAN) interface. The wizard also configures some default firewall rules. I set up the WAN interface with a static IP of 192.168.99.2, and the laptop at 192.168.99.101 was plugged into eth0. The LAN interface (eth1) had an IP range of 192.168.1.1/24 and provided an IP via DHCP to the desktop. The resulting config.boot file is also available for inspection.

Unfortunately the scale and size of this image is slightly off from the direct switched test, but the peaks and dips in the graph should be sufficient to demonstrate the differences in performance. We can see that the 10KB test is particularly brutal on the EdgeRouter Lite, with speeds topping out at about 215Mbit/s. The 100KB test is slightly better in terms of bandwidth, with the lowest test result at 626.82Mbit, but the top of the graph is not smooth on each test. Finally, the ERL with this firmware pulls out a great performance on the 1MB test, with only the last 10K concurrency run showing a few dips in the graph; the lowest result from ab sits at 904.73Mbit.

| Filesize | Average Mbit/s | Total Failed Requests | Notes |
| --- | --- | --- | --- |
| 10KB | 153.81 | 55 | 10K concurrency test was especially terrible at 51.25Mbit/s. No failed requests in 10, 100 and 1000 concurrency tests. |
| 100KB | 800.28 | 48 | 10K concurrency test only resulted in 626.82Mbit/s. Failed requests in 1000 (3) and 10K (45) concurrency tests. |
| 1MB | 908.81 | 23723 | 10K concurrency test failed more requests than completed. |

Followup and Further Testing

These test runs raised some additional questions. For now, they convinced me not to immediately run out and get an EdgeRouter Pro, since according to these results, at 100KB to 1MB filesizes I’d still be able to utilize my full download bandwidth on an ERL. What I really need to do is pull my pfSense box out of line and run it through this test scenario to compare it directly to the EdgeRouter Lite and a direct connection.

Performance and Bandwidth

- I am surprised at the performance difference between the Ars tests of the UniFi Security Gateway and the EdgeRouter Lite in this configuration. Since they have similar specs (512MB RAM, promised 1 million packets per second at 64 bytes, promised line rate at >=512 byte packets), I would expect to see similar results. I’m wondering whether the USG was not using Cavium hardware offload support or if there were significant changes in the 1.9.0 firmware from the tested 1.8.5 version.

- The 100KB test in all configurations had its average bandwidth brought down significantly by the 10K concurrency run. It is not very clear what the ‘receive’ and ‘exceptions’ fields in the ab output indicate, but I suspect these are contributing factors. During further testing I would be curious to find out if there is a concurrency parameter between 1000 and 10,000 that would result in no errors in the output.

- The 1MB/10K concurrency test through the ERL, while it returned >900Mbit in throughput, failed more HTTP requests than it completed. What is interesting is that there is nothing in the nginx error log on the laptop to indicate a failed response on the server side, and a brief packet capture didn’t return any non-200 status codes for responses.
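One way to look for an error-free concurrency level between 1000 and 10,000 is to bisect the range. The sketch below is only an outline of that idea: it assumes failures get steadily worse as concurrency rises, which real hardware may not honour, and the function names and `URL` variable are invented for the example.

```shell
#!/bin/bash
# Sketch: bisect for the highest ab concurrency that finishes with no failed
# requests. It assumes failures only get worse as concurrency rises, which a
# real network may not honour, so treat the answer as a starting point.

# Run ab at concurrency $1 and print its failed-request count.
# URL is an illustrative variable; the default matches the test script's server.
failed_at() {
    ab -rt180 -c"$1" -s 20 "${URL:-http://192.168.99.101/100K.jpg}" 2>&1 |
        awk '/^Failed requests:/ { print $3 }'
}

# Print the largest concurrency in [$1, $2] with zero failures,
# given that $1 is known to be clean and $2 is known to fail.
bisect_concurrency() {
    local lo="$1" hi="$2" mid
    while [ $((hi - lo)) -gt 1 ]; do
        mid=$(( (lo + hi) / 2 ))
        if [ "$(failed_at "$mid")" = "0" ]; then
            lo="$mid"
        else
            hi="$mid"
        fi
    done
    echo "$lo"
}
```

Calling `bisect_concurrency 1000 10000` needs about 13 ab runs of up to 180 seconds each, so shortening the `-t` value for the search and confirming the result with a full-length run afterwards would be reasonable.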

Tweaking and Tuning the Test

- sysctl parameters could likely use some additional tweaking for the two systems. The original Ars article didn’t document each option and while I trust Jim’s parameters, there may be something more we can do with the 16GB of RAM in the test clients.

- Consider changing the nginx web root where the .jpg files are stored to a ramdisk, to avoid the risk of the webserver process having to repeatedly read from the SSD. Of course, nginx may already be caching these files in memory; I could look at iotop during the ab run to see what disk access patterns look like.

- Consider if there is a better way to simulate NNTP and BitTorrent downloads rather than HTTP traffic, because that’s really what people are doing with gigabit-to-the-home on the downstream end. NNTP traffic, for example, generally looks like TLS inside TCP. For most copyright-infringing purposes, it also requires the client to reassemble yEncoded chunks, so there is a CPU impact on the client that is not necessarily present with straight TCP + HTTP. It would be interesting to come up with a “minimum system requirement” to be able to download and reasonably process NNTP data at 1000Mbit line rate.

- Consider varying the contents of the data in each file downloaded; that is, a performant enough server should be able to spew out different data content.

Outstanding Questions

- The netdata graphs presented in the latest Ars article do not seem to match mine with respect to width of each segment. Given that the filesizes are changing during each test (so obviously there will be more data and packets transferred in the 1MB test, which will take more time on the horizontal axis), I’m curious as to what causes this difference.

- I have concerns about the GS108T and whether it is causing drops during the testing; I’ll have to bring in several switches and re-run the tests.

- Unrelated, but I also happened to notice the netdata statistics were indicating TCP errors and handshakes when the desktop was plugged into a different switch on my main home network segment, despite ethtool and ifconfig not indicating any issues on the interface. This concerns me; I’m wondering if there is a misbehaving device on the LAN and if I can isolate it with packet captures or unplugging sections of the network until the problems disappear.

I am currently using a Ubiquiti Edge Router POE and I would like to set up a dual stack configuration with Comcast as my ISP provider. The problem is that I do not know how to do this and I could use some precise instructions. Can anybody help?

Very interesting. Not sure what’s up with the widths of the peaks in your tests, aside from (obviously) your ab runs aren’t actually running for the same amounts of time. It looks to me – assuming I’m reading the timestamps correctly on your netdata graphs – like only your 1M runs are actually running for the 180 seconds specified; without digging into your raw results I don’t know what’s going on there. It’s *possible* that you’re crashing ab out entirely on the smaller runs; particularly the 10K runs, which seem to only be running for a few seconds apiece.

I’m as surprised as you are that you got numbers like that out of your ERL. UBNT assured me that the USG was identical under the hood to the ERL. I haven’t actually tested an ERL in quite a while, but when I bought one on their first release back in the day, it performed pretty similarly to what I saw out of the USG I tested in the article. With that said, I saw some pretty big changes from firmware version to firmware version, and I do know that people at UBNT were looking. Maybe they did make more, big changes?

AHA! Your ab runs are terminating at 50,000 requests. I think the arguments for ab may have changed with the version included in 16.04’s apache2-utils; you may need to manually specify something utterly ludicrous like a kajillion total requests in order to get it to continue until your time limit expires.

try syntax like this:

```bash
ab -rt180 -c10 -s 20 -n 99999999 http://192.168.99.101/10K.jpg
```

Worked for me just now, on 16.04. Ran for the prescribed 180 seconds. 725.7 Mbps (as reported by ab; would be a bit higher on netdata since it would include packet overhead). This was across my Prosafe GS116 switch.