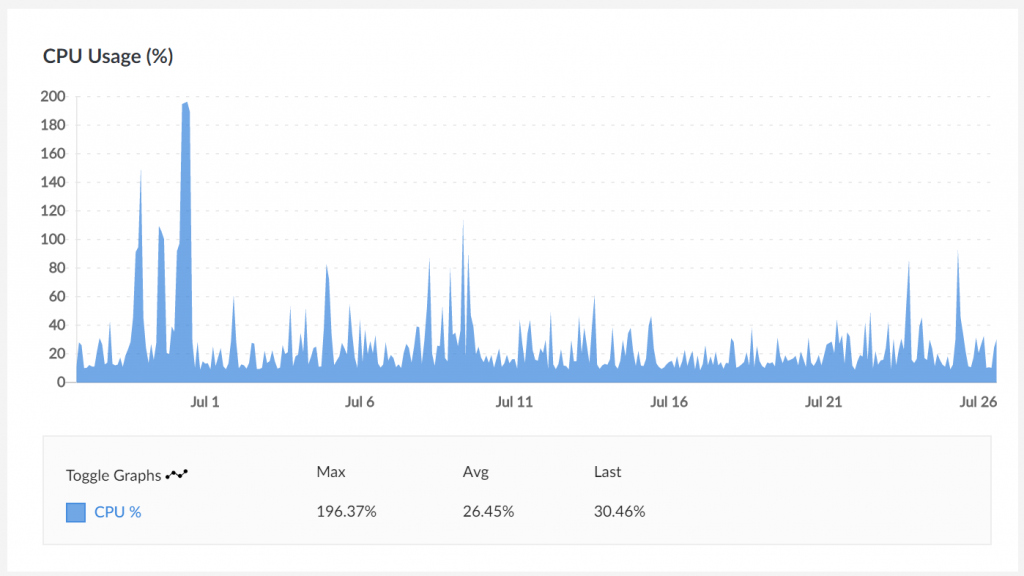

I’ve been trying desperately to catch up on my personal email these past couple months, since it’s rare (with the addition of two babies to our family) to have large uninterrupted blocks of time in which to hack. One of the recurring messages has been a “high CPU” notice from Linode every few days. In my experience this can mean a variety of things, ranging from “your site got quite a few visitors in a short timeframe” to “the backup process is going wonky” to “someone hacked your box and is trying to use it to mine cryptocurrency.”

I’d rather not put a whole bunch of time into investigating the root cause. I know the system needs an entire OS upgrade, and we’re running a bunch of services that are no longer in use, like IRC and Jabber servers. These have been replaced, at the cost of our freedom-as-in-speech, with Slack.

So, in the spirit of “cattle, not pets”, my goal is to decommission the Linode VM and move into AWS, and automate as much as I can while doing it. Having the suite of services all in one place is ideal even on a $20/month budget, and there are a number of services like Lambda, IAM, Parameter Store and DynamoDB that I could make good use of without ever paying anything directly.

Many of the people I support with web hosting aren’t willing or able to give up WordPress, so we’ll have to maintain that capability, but I’d also like a migration path for myself to a static site generator that publishes to S3/CloudFront. The best server is one you don’t have to run yourself.

On one hand, Control Tower

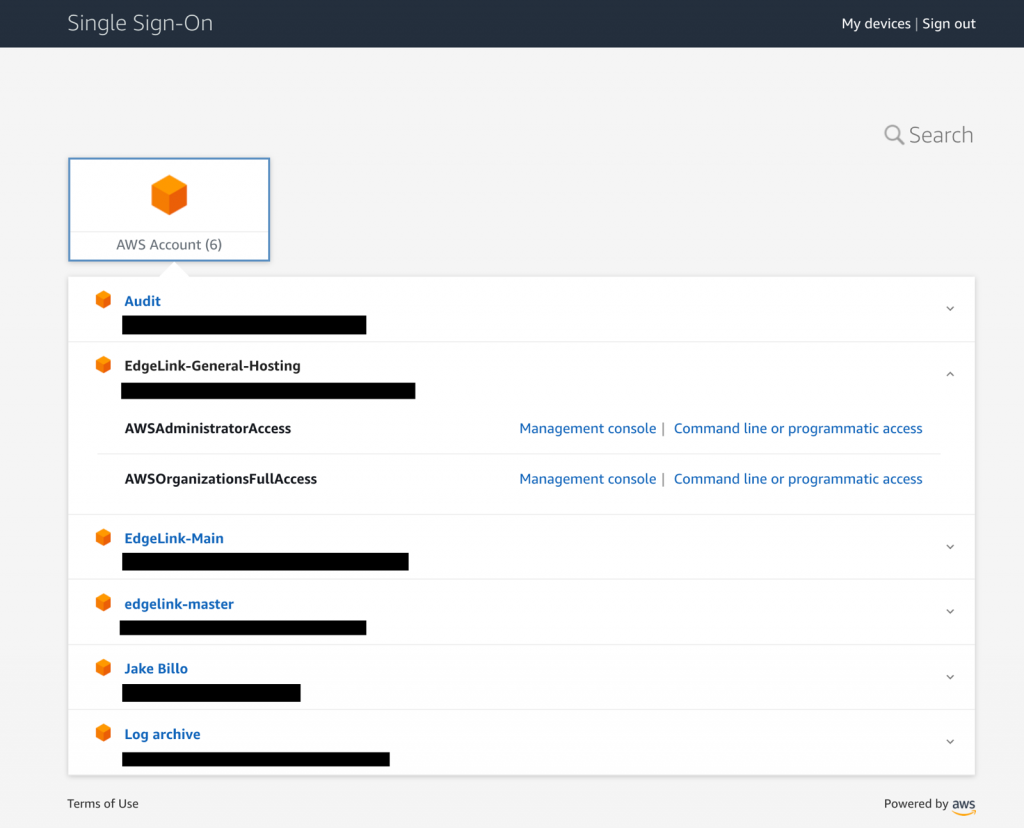

Enter Control Tower, which is one of the more enterprise-y services AWS offers, but surprisingly not at an exorbitant price. It’s a managed way to ensure account compliance and heavily leans on Organizations, SSO, Config, CloudTrail, Service Catalog and CloudFormation (stacks and StackSets) to actually carry out its work.

The biggest cost to me so far has been Config rule creations and evaluations, which added up to $4.76US for my first partial month in June (with four account creations and a couple missteps) but is sitting at $0.12US for July.

SSO, Organizations and the CloudFormation pieces are effectively free. If you’ve never played with AWS SSO, I also highly recommend it – it gives you a landing page similar to Okta where you can assume roles into your authorized AWS accounts for both console and CLI/API access.

Since having separate accounts is effectively the highest level of separation you can get, my idea with Control Tower is to run separate accounts for each tenant that may want to take control of their own services and billing eventually.

I’m not sold on what Config does for its price, but service control policies, CloudFormation-managed VPC definitions, the Account Factory strategy and CloudTrail everywhere are effectively the only way you can maintain a secure, multi-account lifecycle. If anyone from AWS is listening, I’d love a way to use Control Tower with only SCP-based guardrails, and just accept the lack of Config. I may try to hack this solution together myself at some point, even though it’s definitely not best practice.

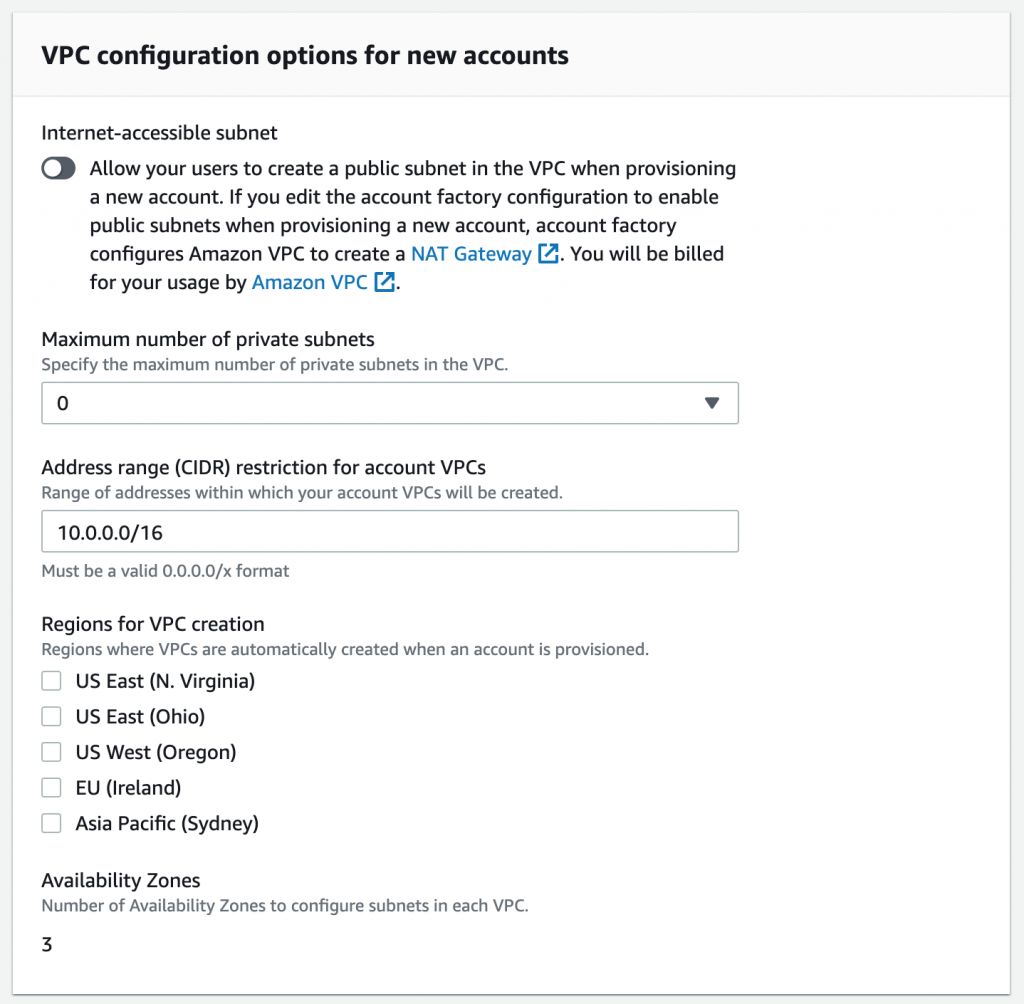

If you decide to experiment with Control Tower yourself, I recommend disabling the public subnet, private subnet, and “regions for VPC creation” options in the Account Factory setup:

These settings should prevent the creation of managed NAT Gateways, which carry an hourly usage charge of about $0.045 US each, not including any data you put through them. They are also created per region that you select for VPC creation. I missed this when provisioning my first couple of accounts and caught it after a couple of hours, but even after updating the StackSet in the master account with what I thought were appropriate parameters to remove the resources, the gateways remained.

If you want default VPCs, you’ll have to go back in and create them in each of the above regions for each new account. The provisioning process removes them from the five regions above but not from other regions, like Canada (Central).

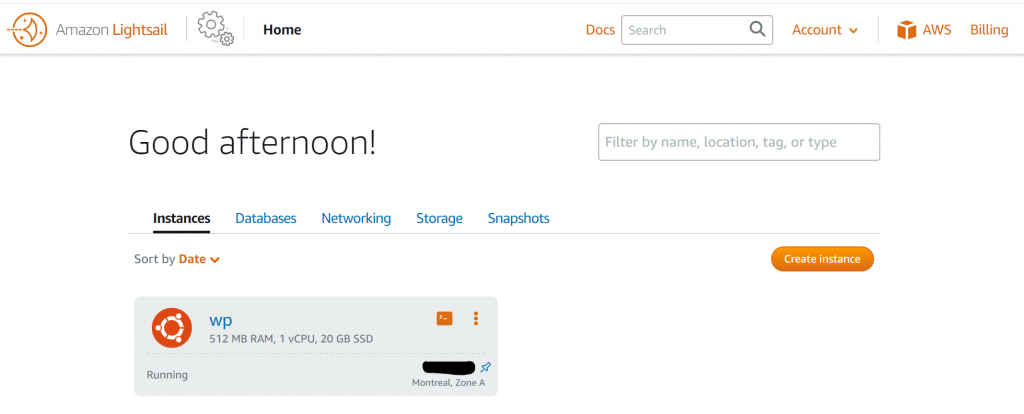

Low-end Lightsail

In what might be considered the polar opposite of service offerings to Control Tower, Amazon Lightsail is the other piece of the puzzle I’ve been experimenting with. It’s AWS’ answer to Linode, Digital Ocean, OVH and other VPS providers. In this market, you pay for a VM with a certain amount of disk, a static IPv4 address, some included bandwidth, and perhaps some DNS management capabilities.

Linode and Digital Ocean are reputable providers in this space and have expanded their offerings beyond VMs to include things like block storage, load balancers or a managed Kubernetes control plane at additional cost. Assume you’re probably spending $5/month for a Linux VM with a gig of RAM, 25GB storage, 1 vCPU of whatever their v measurement is and 1TB Internet data transfer.

For those familiar with AWS capabilities and pricing, Lightsail is interesting because it has some inclusions over vanilla EC2 instances to bring it in line with the above “developer cloud” providers. This makes the pricing much more predictable and transparent compared to the “simple” monthly calculator.

Ignoring the 12-month free tier, you could run a t3a.micro, t3.micro or t2.micro instance in EC2, but already those are $6.80 to $8.47 monthly without reserving them or committing to a Savings Plan. You’re then paying $0.10US/GB/month for a gp2 SSD-based EBS volume, so kick in another $2.50 monthly on your bill for a 25GB disk.

AWS’ outbound data charges are also well known for being convoluted, but for the sake of this argument let’s assume you’re running the instance in a public subnet and sending more than 1GB and less than 10TB to the Internet in a month. Starting at $0.09/GB in the cheapest regions, 1TB comes out to just over $92US in AWS, and that would be included in the $5 monthly fee for both Linode and Digital Ocean.
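For anyone who wants to redo that arithmetic with their own numbers, here is a rough sketch in Python. The prices are the 2020 figures quoted above rather than live pricing, and treating 1TB as 1024GB with the first 1GB free is my assumption about how the tier boundaries apply.

```python
# Rough monthly estimate for a small EC2 instance pushing traffic to the Internet.
# Prices are the figures quoted in the post (USD, 2020), not live AWS pricing.

EBS_GP2_PER_GB_MONTH = 0.10  # gp2 SSD volume, per GB per month
EGRESS_PER_GB = 0.09         # first paid outbound tier in the cheapest regions
FREE_EGRESS_GB = 1           # assumption: the first 1GB out each month is free


def ebs_cost(size_gb: float) -> float:
    """Monthly cost of a gp2 volume of the given size."""
    return size_gb * EBS_GP2_PER_GB_MONTH


def egress_cost(gb_out: float) -> float:
    """Monthly cost of outbound Internet traffic (valid below the 10TB tier)."""
    billable_gb = max(gb_out - FREE_EGRESS_GB, 0)
    return billable_gb * EGRESS_PER_GB


def ec2_monthly_estimate(instance_monthly: float, disk_gb: float, gb_out: float) -> float:
    """Instance + disk + outbound data, ignoring everything else on the bill."""
    return instance_monthly + ebs_cost(disk_gb) + egress_cost(gb_out)


if __name__ == "__main__":
    # Cheapest instance figure from the post, a 25GB disk to match the
    # $5 VPS plans, and 1TB (taken as 1024GB) of outbound traffic.
    total = ec2_monthly_estimate(6.80, 25, 1024)
    print(f"EC2 estimate: ${total:.2f}/month vs. a flat $5.00 VPS plan")
```

The data transfer line dwarfs everything else, which is the whole point: the instance and disk are a rounding error next to a terabyte of egress.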

Lightsail has a much more user-friendly pricing structure if you’re willing to live with some limitations. You give up per-second billing for hourly granularity, but get 20GB EBS storage, 1TB bandwidth and three DNS zones (which would be $1.50/month in Route53 proper) with the smallest “nano” $3.50US/month plan.

A quick tour

Lightsail also uses a drastically different console interface than the rest of AWS, even when compared to the “new” and “old” designs that you might see in the EC2 or VPC consoles:

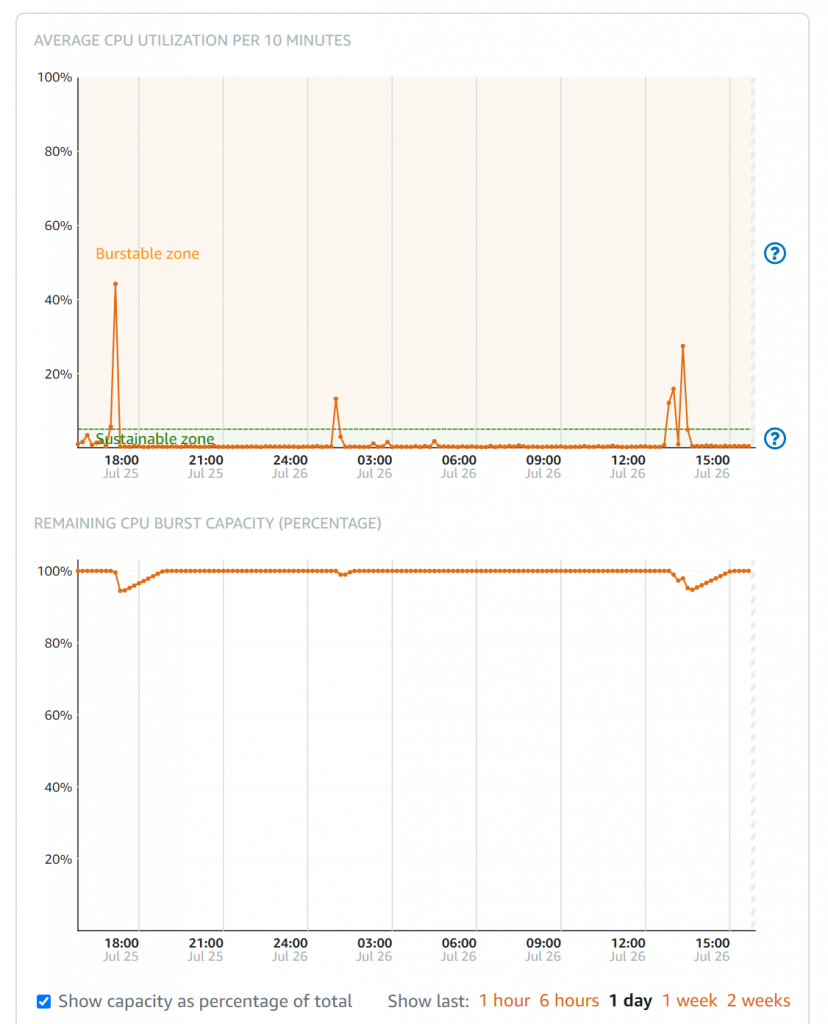

There’s quite a friendly interface for visualizing CPU usage and how the burst capacity works. Select the three-dot menu for your instance, choose Manage and then pick the Metrics tab:

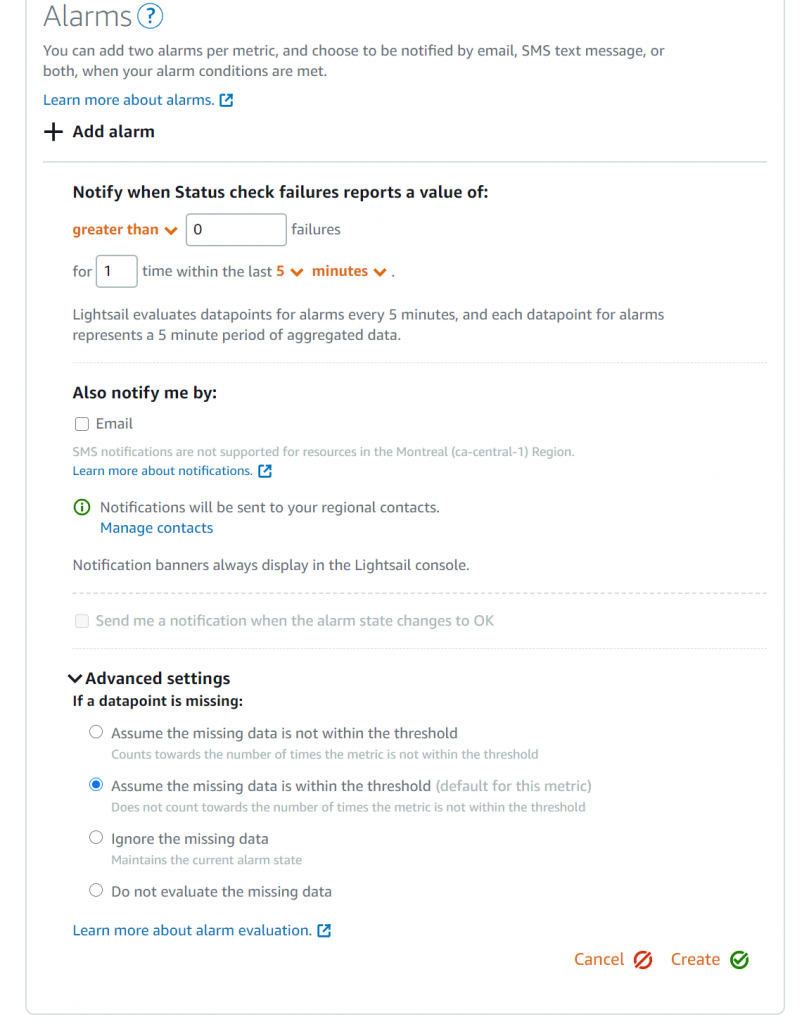

There are also network views and status check views, which is good because even though these are clearly CloudWatch metrics for an instance, you don’t have any access to them through the CloudWatch console. Another interesting capability here is the ability to set up to two CloudWatch alarms per metric, again within the Lightsail console only and with no direct SNS access.

A real t2, but not in your own account

AWS obscures the true underpinnings of Lightsail a little bit. I assume this is an effort to distinguish the service from EC2, as well as capture the set of developers who don’t want to learn about the nuances of burst credits and ephemeral vs. block storage, and just want a VM with some disk and network. Indeed, the Lightsail FAQ explicitly mentions “burstable performance instances” with similar language to the EC2 FAQ, but never makes clear whether it’s really a t2 behind the scenes.

If you compare the RAM and vCPU specs on each of the plans, they line up fairly closely with the same t2-class instances – so the lowest end $3.50/month plan maps to a t2.nano, the $5/month plan a t2.micro, and so on from there – culminating in $160/month for a Lightsail-badged t2.2xlarge. Indeed, if you poke around with the Lightsail API, the GetBundles call returns a response with an instanceType element that reflects this mapping as well.
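As a sketch of how you might pull that mapping out of a GetBundles response, the helper below works on the parsed response dict. The field names (bundles, bundleId, instanceType, price) are what I understand the API to return; the sample values simply follow the mapping described above with placeholder bundle IDs, and are not a captured API response.

```python
from typing import Dict, List, Tuple


def bundle_instance_types(response: dict) -> Dict[str, str]:
    """Map each bundleId to its instanceType from a GetBundles-style response."""
    return {b["bundleId"]: b["instanceType"] for b in response.get("bundles", [])}


def plans_by_price(response: dict) -> List[Tuple[float, str]]:
    """Return (monthly price, instanceType) pairs, cheapest first."""
    pairs = [(b["price"], b["instanceType"]) for b in response.get("bundles", [])]
    return sorted(pairs)


# Illustrative data only: placeholder IDs, values taken from the mapping in the post.
SAMPLE_RESPONSE = {
    "bundles": [
        {"bundleId": "example-micro", "instanceType": "t2.micro", "price": 5.0},
        {"bundleId": "example-nano", "instanceType": "t2.nano", "price": 3.5},
    ]
}

if __name__ == "__main__":
    for price, instance_type in plans_by_price(SAMPLE_RESPONSE):
        print(f"${price:.2f}/month -> {instance_type}")
```

With boto3 installed and credentials configured (both assumptions on my part), the real response should come from `boto3.client("lightsail").get_bundles()` and can be passed straight into either helper.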

But to truly prove if a Lightsail instance walks, talks and quacks like an EC2 instance, you can query the instance metadata service once you have shell access:

    curl http://169.254.169.254/latest/meta-data/instance-type

This dutifully comes back with t2.nano on the $3.50 per month plan.

But now that we know it’s an EC2 instance, we can get other attributes like ami-id. In ca-central-1 on Ubuntu 18.04, my sample instance comes back with ami-0427e8367e3770df1, the public, official build from 2018-09-12, so it’s not using “special” AMIs.

Reviewing the security-groups metadata returns a ps-adhoc-22_443_80 entry reflecting the ports I’ve allowed in from the Internet at large, as well as two more: Your Parkside Resources and Ingress from Your Parkside LoadBalancers. Perhaps “Parkside” is the Lightsail service codename?
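If you’d rather script these metadata lookups than run curl by hand, something like the following should do it. It is a minimal sketch using only the Python standard library; the function names are mine, and it mirrors the plain unauthenticated GET used above, so an instance that enforces IMDSv2 session tokens would need an extra token request first.

```python
import urllib.request
from typing import Callable, Dict, Iterable

# Link-local instance metadata endpoint, same base URL as the curl call in the post.
METADATA_BASE = "http://169.254.169.254/latest/meta-data/"


def http_get(url: str, timeout: float = 2.0) -> str:
    """Fetch a URL and return the body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def read_metadata(keys: Iterable[str], fetch: Callable[[str], str] = http_get) -> Dict[str, str]:
    """Look up each metadata key and return a {key: value} dict.

    `fetch` is injectable so the function can be exercised off-instance.
    """
    return {key: fetch(METADATA_BASE + key).strip() for key in keys}
```

On the instance itself, `read_metadata(["instance-type", "ami-id", "security-groups"])` collects the three values discussed above in one go. Anywhere else the request will simply time out, since the address is only reachable from inside the instance.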

The last metadata item I poked at was iam/info, which returned the following:

    {
      "Code" : "Success",
      "LastUpdated" : "2020-07-26T20:26:24Z",
      "InstanceProfileArn" : "arn:aws:iam::956326628589:instance-profile/AmazonLightsailInstanceProfile",
      "InstanceProfileId" : "AIPA55KMCJTWZI2WL3WI5"
    }

For good measure, I then ran aws sts get-caller-identity (which is an STS API call that always goes through) from the AWS CLI and got back:

    {
      "UserId": "AROA55KMCJTWRW2HIHLEG:i-00b79bdc9e2156f8f",
      "Account": "956326628589",
      "Arn": "arn:aws:sts::956326628589:assumed-role/AmazonLightsailInstanceRole/i-00b79bdc9e2156f8f"
    }

Note that the account ID 956326628589 is not one of the accounts in my organization, so what I think is actually happening here is that a Lightsail instance is indeed just a t2-class EC2 instance running in an Amazon-managed account. I also started Lightsail instances in us-east-1 and us-east-2 and confirmed that this account ID stays the same.
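The same check can be done mechanically by pulling the account ID out of the caller ARN and comparing it against the accounts you own. This is only a sketch: the helper names are mine and the organization account IDs below are made-up placeholders, while the ARN is the one returned above.

```python
from typing import NamedTuple, Set


class ParsedArn(NamedTuple):
    partition: str
    service: str
    region: str
    account_id: str
    resource: str


def parse_arn(arn: str) -> ParsedArn:
    """Split an ARN of the form arn:partition:service:region:account-id:resource."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return ParsedArn(*parts[1:])


def is_foreign_account(arn: str, my_accounts: Set[str]) -> bool:
    """True when the ARN belongs to an account outside the given set."""
    return parse_arn(arn).account_id not in my_accounts


if __name__ == "__main__":
    caller_arn = (
        "arn:aws:sts::956326628589:assumed-role/"
        "AmazonLightsailInstanceRole/i-00b79bdc9e2156f8f"
    )
    # Placeholder IDs standing in for the accounts in your own organization.
    org_accounts = {"111111111111", "222222222222"}
    print(parse_arn(caller_arn).account_id, is_foreign_account(caller_arn, org_accounts))
```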

This has got to be really interesting to sort out on the AWS side in terms of how they bill customers (perhaps also contributing to the hourly granularity), but also makes sense as to why you can’t actually see the true instance, IAM resources, CloudWatch metrics or ENIs from console, API or CLI.

It’s also in the same vein as authorizing Classic or Application Load Balancer logs to be written to an S3 bucket in your account: you have to allow one of the predefined AWS account IDs (127311923021 for us-east-1, 797873946194 for us-west-2, 985666609251 for ca-central-1) in the bucket policy before logs can be written to your own bucket. They keep adding account IDs for new regions to the ELB list, so I expect this is a pattern that persists despite the introduction of service-linked roles or the service principal used in NLB logging.
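To make the ELB comparison concrete, here is a sketch that builds such a bucket policy as a Python dict. The three account IDs are the ones listed above; the function name, bucket name, prefix and account number are hypothetical, and the statement follows the general shape of the documented access-log policy rather than anything specific to my setup.

```python
import json

# Regional ELB account IDs quoted in the post; other regions have their own.
ELB_ACCOUNT_IDS = {
    "us-east-1": "127311923021",
    "us-west-2": "797873946194",
    "ca-central-1": "985666609251",
}


def elb_log_bucket_policy(bucket: str, region: str, my_account_id: str, prefix: str = "") -> dict:
    """Build a bucket policy letting the regional ELB account write access logs."""
    elb_account = ELB_ACCOUNT_IDS[region]
    key_prefix = f"{prefix.strip('/')}/" if prefix else ""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The principal is an AWS-owned account, not anything in your organization.
                "Principal": {"AWS": f"arn:aws:iam::{elb_account}:root"},
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/{key_prefix}AWSLogs/{my_account_id}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket, prefix and account ID for illustration.
    policy = elb_log_bucket_policy("example-log-bucket", "ca-central-1", "111111111111", "alb")
    print(json.dumps(policy, indent=2))
```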

Next up

I did successfully manage to move my own website to Lightsail – and honestly, the nano size is performing quite well despite its 512MB footprint, so the next process will be continuing to document the relevant Terraform and Ansible code to be able to provision a new instance from scratch, and restore its content if it were to get terminated.

While I’m experienced with CloudFormation, Terraform is somewhat necessary for doing anything close to Infrastructure as Code with Lightsail – the only CloudFormation reference with Lightsail is when you go to export a snapshot to EC2. I have several complaints about Terraform, specifically around a couple of fairly-reasonable pull requests for Lightsail resources that have just sat there for a while, but that’s a whole separate post best illustrated with code examples.

I’d also like to poke around a little bit more with VPC peering, another area where I assume the service limits have been raised on the AWS side, and find out exactly how isolated my Lightsail instances are from other people’s.

With this move, I also have a few feature requests for the Lightsail team:

- CloudFormation support for all resources! Please!

- Ability to customize the policy attached to the role/instance profile. The use case is to grant the instance direct access to read from/write to an S3 bucket rather than using an IAM access key, or to use DynamoDB or Parameter Store, or to invoke Lambda functions directly.

  - I recognize this might be difficult, especially in light of the underlying instance running in a different account…

  - Maybe one could allow the Lightsail instance to assume a role in our own accounts for this purpose…

- Ubuntu 20.04 and Amazon Linux 2 “blueprints”

- Move to, or choice of t3 or t3a-backed instances which have much better network performance

- S3 and DynamoDB gateway VPC endpoints

- Graviton (ARM) instance support would be absolutely fascinating for this use case (Linux server running nginx/PHP/MySQL), although likely dependent on an entirely theoretical “t4g.nano” EC2 instance

But for now, I leave the Internet to see if we can exhaust the burst credit balance on this thing!

t4g.nano is not theoretical anymore. https://aws.amazon.com/blogs/aws/new-t4g-instances-burstable-performance-powered-by-aws-graviton2/

😉